VMware provides a vRealize Operations (vROps) Management Pack for Kubernetes that supports Prometheus; documentation is accessible here. As per the Prometheus documentation, only basic auth is currently supported, so this is the authentication method that has been certified in the Management Pack. However, OpenShift Container Platform (OCP) comes with an internal Prometheus instance that only supports token-based authentication. In this post I share how to enable the Management Pack to integrate with the OCP internal Prometheus instance. The solution is pretty simple: we place a reverse proxy (NGINX in my case, but any reverse proxy would do the job) between vROps and the OCP internal Prometheus. The reverse proxy performs token-based authentication on behalf of the Management Pack.

This solution is validated against the following product versions:

- vRealize Operations 8.6

- Management Pack for Kubernetes 1.7

- OpenShift Container Platform 4.7

It is my understanding that the same approach would be supported for the following versions:

- vRealize Operations 8.5 and above

- Management Pack for Kubernetes 1.6.1 and above

- OpenShift Container Platform 4.7 and above

Requirements

- Create a Service Account in each OpenShift Container Platform instance as per doc. The Service Account needs the following grants: cluster-monitoring-view and cluster-reader

- Deploy an NGINX server (in my case running on a Linux VM, but nothing stops you from having it deployed on OCP)

- Configure NGINX (the nginx.conf file) with a server context for each OCP cluster instance to be monitored

- NGINX needs to be configured as a reverse proxy for the / location, as to my understanding other locations are currently not supported by the Management Pack

You can find plenty of examples of how to configure NGINX; this is the official NGINX documentation. Below is an example of the specific NGINX server context configuration in the nginx.conf file that we need in order to enable our integration.

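    server {
        # Use a different listen port for each OCP instance
        listen 8081;

        location / {
            # Service Account token used to authenticate against the OCP internal Prometheus
            set $token "<your-ocp-instance-service-account-token>";
            proxy_set_header Authorization "Bearer $token";
            proxy_pass <your-ocp-prometheus-instance-url>;
        }
    }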

A server context like the one above must be created for each OCP instance to be managed with vROps. Please make sure to use a different listen port for each server context.
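Since every cluster needs its own server context, the blocks can also be generated instead of copied by hand. The following is only a minimal sketch under a few assumptions: `render_server_context` is a hypothetical helper name, the port numbers and placeholder values are illustrative, and the token is written directly into the header rather than through `set $token`, which should behave the same way.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original setup): prints one NGINX
# server context for one OCP cluster.
# Arguments: <listen-port> <service-account-token> <prometheus-url>
render_server_context() {
  cat <<EOF
server {
    listen ${1};
    location / {
        proxy_set_header Authorization "Bearer ${2}";
        proxy_pass ${3};
    }
}
EOF
}

# One call per OCP instance, each with its own listen port.
# The values below are placeholders to be replaced with real ones.
render_server_context 8081 "<token-for-cluster-a>" "<prometheus-url-for-cluster-a>"
render_server_context 8082 "<token-for-cluster-b>" "<prometheus-url-for-cluster-b>"
```

The printed blocks would then be pasted into the http context of nginx.conf, or redirected to a file that nginx.conf includes.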

Use the following instructions to retrieve both the Service Account token and the Prometheus URL for each OCP instance.

Log in to the OCP instance using the CLI:

    $ oc login -u <your-username> -p <your-password> <ocp-instance-master-url>

Get the Service Account token:

    $ oc sa get-token <your-sa-name> -n <your-sa-namespace>

Get the Prometheus URL:

    $ oc get route -n <your-prometheus-namespace> <Prometheus-route-name> -o jsonpath='{.status.ingress[0].host}'

The last command returns only the route host name, so add the scheme in front of it when using it in proxy_pass.

Configure the Management Pack

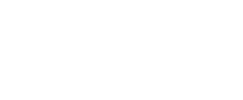

In the vRealize Operations console, configure an Account for the MP for Kubernetes for each OCP instance as in the picture below.

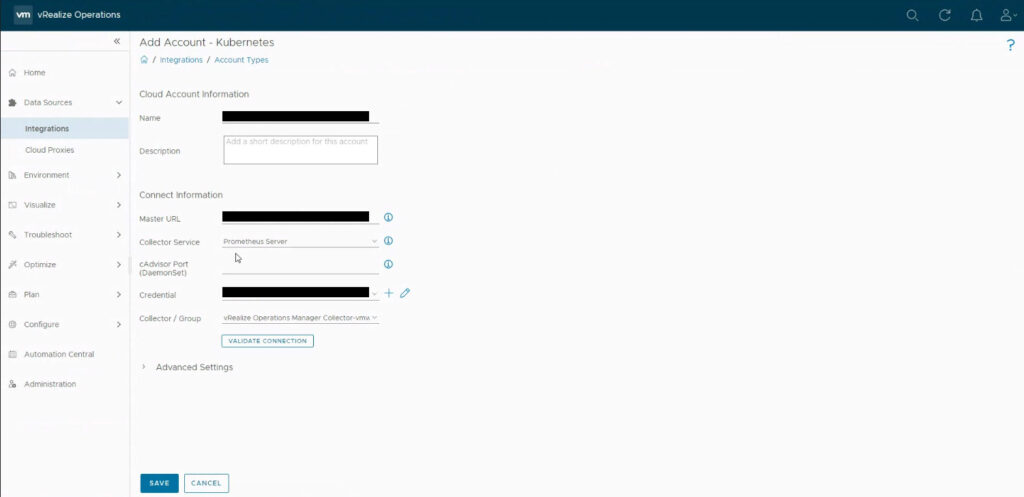

For each Account, create a Credential as shown in the picture. Please note that the Prometheus Server parameter has to be set to your NGINX FQDN followed by the listen port defined in the server context (in the nginx.conf file) for the specific OCP instance to be monitored. Use the following standard syntax: <your-nginx-fqdn>:<listen-port-in-nginx-config>/
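Before saving the Credential, it can help to check that the proxy answers a Prometheus query without any credentials on the client side. The sketch below assumes that the server context listens over plain HTTP, as in the example configuration, and that curl is available. `proxy_query_url` and `check_proxy` are hypothetical helper names, and the host in the example call is a placeholder.

```shell
#!/bin/sh
# Builds the Prometheus instant-query URL as served through the NGINX proxy.
# Arguments: <nginx-fqdn> <listen-port>
proxy_query_url() {
  printf 'http://%s:%s/api/v1/query?query=up\n' "$1" "$2"
}

# Sends the query without credentials; NGINX is expected to add the Bearer
# token. Exits non-zero on HTTP errors (-f) or after 10 seconds.
# Arguments: <nginx-fqdn> <listen-port>
check_proxy() {
  curl -sf --max-time 10 "$(proxy_query_url "$1" "$2")"
}

# Example call with a placeholder host (uncomment to run):
# check_proxy nginx.example.com 8081
```

If the token and the Prometheus URL are correct, the response should be a JSON document from the Prometheus HTTP API. An authentication error at this stage would point at the token or at the Service Account grants rather than at the Management Pack.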

Once you have the Management Pack configured, you should see data start flowing into vROps.